Software Defined Networking for Community Network Testbeds

As a closing post on this effort, here are the resulting contributions:

Poxy: A proxy for the OpenFlow controller – OpenFlow switch connection

Pongo: A POX application, integrated with Django, which handles the topology of a mesh of nodes (currently customized for the Community-Lab testbed).

My thesis titled “Software Defined Networking for Community Network Testbeds” can be found here.

A paper titled “Software Defined Networking for Community Network Testbeds” in the 2nd International Workshop on Community Networks and Bottom-up-Broadband (CNBuB 2013).

Here you can find the presentation slides.

Thanks for following. More work on Community Networks is coming up.

Take care.

Fast start with Open vSwitch and POX controller in Debian Wheezy

Instructions mostly from the Open vSwitch Readme

Installation

// Delete all linux bridges that exist.

// then remove the bridge module

root@debian:/# modprobe -r bridge

// Install common and switch packages

root@debian:/# aptitude install openvswitch-switch openvswitch-common

// Install and build the ovs module using the DKMS package

root@debian:/# aptitude install openvswitch-datapath-dkms

Initialization

// Load the ovs module

root@debian:/# modprobe openvswitch_mod

// Create the configuration database

root@debian:/# ovsdb-tool create /var/lib/openvswitch/conf.db /usr/share/openvswitch/vswitch.ovsschema

// Start ovsdb-server without SSL support

root@debian:/# ovsdb-server --remote=punix:/var/run/openvswitch/db.sock --remote=db:Open_vSwitch,manager_options --pidfile --detach

// The next command should be run the first time a database is created

// with ovsdb-tool, as we did above. It initializes the db

root@debian:/# ovs-vsctl --no-wait init

// Start ovs daemon

root@debian:/# ovs-vswitchd --pidfile --detach

// Create a bridge

root@debian:/# ovs-vsctl add-br br0

And we are ready to go!
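A quick sanity check of the steps above is to confirm that the Linux bridge module is no longer loaded and that the OVS module is. The following is a minimal sketch, assuming the usual /proc/modules layout in which each line starts with the module name followed by a space. The function name `module_loaded` is made up for this example.

```shell
# Succeeds if the named kernel module appears in the module list.
# The second argument (the list file) defaults to /proc/modules and exists
# only so the function can be tried against a sample file.
module_loaded() {
    grep -q "^$1 " "${2:-/proc/modules}"
}

# Example checks on the host:
#   module_loaded bridge          && echo "bridge is still loaded"
#   module_loaded openvswitch_mod || echo "OVS module is not loaded"
```

The trailing space in the pattern keeps a module such as `bridge` from matching longer names that merely begin with the same letters.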

Integrate POX with OVS

// Get POX and switch to the betta branch (the current stable branch)

user@debian:~/$ git clone http://www.github.com/noxrepo/pox

user@debian:~/$ cd pox/

user@debian:~/pox/$ git checkout betta

// Start an empty controller without any forwarding components, so rules have to

// be added manually

user@debian:~/pox/$ ./pox.py samples.pretty_log

// Switch to another terminal

// Define the switch's behaviour if the connection with the controller is lost:

// standalone or secure, see the ovs-vsctl manual

root@debian:/# ovs-vsctl set-fail-mode br0 standalone

// Tell the switch the controller's address

root@debian:/# ovs-vsctl set-controller br0 tcp:0.0.0.0:6633

Now everything is set up. Have fun 😛
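Both ovs-vsctl commands take the bridge name, so it is easy for the two to drift apart. The sketch below prints the pair of commands for a given bridge and controller instead of running them, so they can be reviewed first. The function name `controller_cmds` is hypothetical, and the address 127.0.0.1 in the usage comment assumes the controller runs on the same host as the switch. Port 6633 is the one used above.

```shell
# Print (not run) the two commands that attach a bridge to a controller.
# Arguments: bridge name, controller IP, controller port (default 6633).
controller_cmds() {
    bridge=$1
    ip=$2
    port=${3:-6633}
    echo "ovs-vsctl set-fail-mode $bridge standalone"
    echo "ovs-vsctl set-controller $bridge tcp:$ip:$port"
}

# Review the output, then run it as root, e.g.:
#   controller_cmds br0 127.0.0.1 | sh
```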

Brief notes on Batman-adv

Theoretical Ideas

Batman-adv is a distance-vector mesh routing protocol that operates entirely at L2 (or 2.5, as is often said).

- “Batman-advanced operates on ISO/OSI Layer 2 only and uses and routes (or better: bridges) Ethernet Frames. It emulates a virtual network switch of all nodes participating. Therefore all nodes appear to be link local, thus all higher operating protocols won’t be affected by any changes within the network.” [3]

I guess that the topology info for the vis-server is propagated by the protocol itself, so it does not add significant overhead.

- “In dense node areas with low packet loss B.A.T.M.A.N. III generates quite some packets which increases the probability of collisions, wastes air time and causes more CPU load. Each OGM being 20 Bytes small, B.A.T.M.A.N. IV introduces a packet aggregation that combines several distinct OGMs into one packet.” [1]

But the problem still exists in dense node areas regardless of the aggregation, since it is inherent in the environment.

- “A virtual interface is provided (bat0), which can be considered as a usual ethernet device (probably with a little more packet loss 😉 )” [1]

- “From a couple of years B.A.T.M.A.N. has moved from a layer 3 implementation to a layer 2 one (2.5 would be more correct as it resides between the data-link and the network layer running in kernel and not on NICOS but using MAC addresses) without changing the routing algorithm. B.A.T.M.A.N. now represents a new level in the network stack, with its own encapsulation header and its own services. It became totally transparent to the IP protocol since it now works like an extended LAN with standard ethernet socket exposed to the IP layer.” [2]

- “at the IP layer, every packet sending operation is seen as a one-hop communication” [2]

Would we need an L3 protocol then, if there is no communication with the external world (other networks)?

Technical Facts (that were useful to me)

- The batman-adv module in the Debian Wheezy repository is build 2011.4.0, which runs the Batman-IV routing algorithm.

- The batman-adv module cannot be loaded separately for each container inside LXC, because containers cannot insert kernel modules, so it cannot simply be associated with a namespace. Therefore, the virtual interface should be created on the host and somehow be used by all the containers. No specific integration for this exists on the batman-adv side.

- Up to version 2013.2.0 (current), the vis functionality was part of the kernel module. This was changed, as mentioned here: “This is replaced by a userspace program, we don’t need this functionality to bloat the kernel.”

Evaluation

In 2010 it had problems with node failure, while 802.11s handled it very well. [1]

“We can note that batman-adv is generally more stable” (than 802.11s, in terms of links). [1]

“networks performance decreases sharply after two hops for both voice and Ethernet sized packets.” [2]

“BATMAN was proven to work as well in an environment with significant interference and longer distances between nodes as in a friendlier setting. In contrast, AODV often failed to provide stable routes in cases where multi-hop communication was necessary”[3]

Since its third version (v.2011.3.0), batman-adv has much improved performance [4] (this came after the evaluation papers were written).

Batman can be used on any kind of interface.

[1]: Experimental evaluation of two open source solutions for wireless mesh routing at layer two, Paper (batman-adv vs open80211s)

Final thought

Finally, I want to share with you an interesting article that presents a different motivation besides mesh networking: Mesh networking with batman-adv

Ultra-short LXC troubleshooting

Not mounting the directories

The sys_admin capability should be enabled in order for the filesystem to be mounted correctly when the container boots.

Not connecting to the console and respawning

The ttys should be allowed in the config file.

An example working config file can be found here

The file rootfs/etc/inittab shows which tty devices are spawned after initialization. To start tty1 and tty2, it should contain something like this:

1:2345:respawn:/sbin/getty 38400 tty1

2:23:respawn:/sbin/getty 38400 tty2

These ttys should also exist in the rootfs/dev folder, or should be created:

root@sth# mknod -m 660 dev/tty1 c 4 1

root@sth# mknod -m 660 dev/tty2 c 4 2

Then a connection to the console should be started:

lxc-console -n myfirstcontainer -t 2 # Number of tty
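The two checks above (which ttys inittab respawns, and whether their device nodes exist) can be combined in a small script. The following is a minimal sketch, assuming a container root filesystem laid out as described, with etc/inittab and dev/ under it. The function name `missing_ttys` is hypothetical.

```shell
# List ttys that inittab starts a getty on but that are missing from dev/.
# Usage: missing_ttys <rootfs-directory>
missing_ttys() {
    rootfs=$1
    # Non-comment inittab lines have the form id:runlevels:action:process;
    # keep respawn entries that run getty and take the last word (the tty).
    awk -F: '!/^#/ && $3 == "respawn" && $4 ~ /getty/ { n = split($4, w, " "); print w[n] }' \
        "$rootfs/etc/inittab" |
    while read -r tty; do
        # -e is true for any file type, including character devices
        [ -e "$rootfs/dev/$tty" ] || echo "$tty"
    done
}
```

Each name it prints is a candidate for one of the mknod commands shown above. It would be called with the path of the container's rootfs directory, which depends on the local LXC setup.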

Remote Mininet VM with Virtualbox in Debian Host

Mininet before version 2.0 did not come with an easy way to reach the Internet through the Mininet VM. The Mininet 2.0 VM comes with a connection to the host's network by default, which overcomes this problem.

I am now going to describe how to run Mininet on a remote Debian host over ssh and rdesktop, using VirtualBox in headless mode. VirtualBox is assumed to be installed.

-Get Mininet image (>=2.0)

# Download Mininet 2.0 ubuntu image

wget https://github.com/downloads/mininet/mininet/mininet-2.0.0-113012-amd64-ovf.zip

# Extract

unzip mininet-2.0.0-113012-amd64-ovf.zip

-Prepare Virtualbox and import VM

# Get and install vbox extension pack for vrde support (remote desktop)

# Get a version corresponding to your vbox version

wget http://download.virtualbox.org/virtualbox/4.2.12/Oracle_VM_VirtualBox_Extension_Pack-4.2.12-84980.vbox-extpack

vboxmanage extpack install Oracle_VM_VirtualBox_Extension_Pack-4.2.12-84980.vbox-extpack

# Import vm, the name of the vm will be "vm"

vboxmanage import mininet-vm.ovf

# Turn vrde on

vboxmanage modifyvm vm --vrde on

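# Set authentication using the host's credentials

vboxmanage modifyvm vm --vrdeauthtype external

# Set the vrde port to 5001 (the VirtualBox default is 3389)

vboxmanage modifyvm vm --vrdeport 5001

# Start the vm headless, with the vrde settings taken from its configuration

vboxheadless --startvm vm --vrde config

-Connect with rdesktop from a Linux client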

# Connect to the vm with host IP (1.2.3.4), username and password

rdesktop -a 16 -u user -p password -N 1.2.3.4:5001

To connect with the Windows client mstsc.exe, a .rdp file should be created that contains the username and password (help).

-Enable ssh

# Enable ssh: forward host port 8082 to port 22 of the vm

vboxmanage modifyvm vm --natpf1 "ssh,tcp,,8082,,22"

Now mininet is ready for remote use through remote desktop or ssh ;-).

A hands-on intro to OpenFlow and Open vSwitch with Mininet (Part I)

In this post I will provide some notes on the OpenFlow tutorial using Mininet.

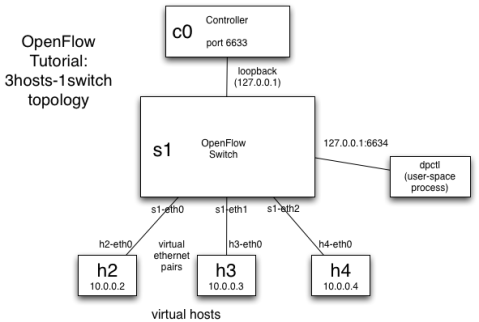

The topology used in the tutorial is the following (the controller is external to mininet):

Controller Startup

We start the OpenFlow reference controller with the command:

$ controller ptcp:

This will create the following series of messages to be exchanged.

You can get a copy of a similar capture from here. The capture can be viewed in Wireshark using the OpenFlow Wireshark dissector (helpful guide).

Continuous communication problem.

Then, the controller and the switch keep exchanging Echo Request and Echo Reply messages frequently.

According to the OpenFlow specification [1]:

In the case that a switch loses contact with the

controller, as a result of a echo request timeout,

TLS session timeout, or other disconnection, it

should attempt to contact one or more backup

controllers.

This can prove to be a problem in our case. How the different controllers implement this, how frequent the messages are, and whether the frequency can be modified in a proactive environment should all be explored. The tutorial mentions the following about POX:

When an OpenFlow switch loses its connection to a

controller, it will generally increase the period

between which it attempts to contact the controller,

up to a maximum of 15 seconds. Since the OpenFlow

switch has not connected yet, this delay may be

anything between 0 and 15 seconds. If this is too

long to wait, the switch can be configured to wait

no more than N seconds using the --max-backoff

parameter.
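The reconnection behaviour quoted above can be illustrated with a few lines of shell. This is only a sketch: the quote says that the period increases up to a maximum, while the doubling and the 1-second starting value used here are assumptions, as is the function name `backoff_schedule`.

```shell
# Print the delay (in seconds) before each of <attempts> reconnection tries.
# The delay doubles from 1 second and is capped at <max_backoff>.
# Doubling and the 1-second start are assumptions made for illustration.
backoff_schedule() {
    max_backoff=$1
    attempts=$2
    delay=1
    i=0
    while [ "$i" -lt "$attempts" ]; do
        echo "$delay"
        delay=$((delay * 2))
        if [ "$delay" -gt "$max_backoff" ]; then
            delay=$max_backoff
        fi
        i=$((i + 1))
    done
}
```

With a cap of 15 seconds, `backoff_schedule 15 6` prints 1, 2, 4, 8, 15 and 15 on separate lines. A lower cap bounds how long a switch may sit idle before it retries.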

Also useful is this post in the ovs-discuss list.

Flow Modification

Since the switch flow tables are initially empty, a ping command will cause the following message exchange.

You can get a copy of a similar capture from here.

We can see the Packet In, Packet Out and Flow Mod messages.

Flow removal messages do not appear as mentioned in the tutorial. This may be a problem with the OVS version (1.2.2). Additionally, this version does not support the send_flow_rem flow option through the ovs-ofctl tool, nor is the option supported by the dpctl provided by the OF implementation. Thus, there is no way to check what the actual problem is.

User-space OVS vs kernel-space OVS

Following the tutorial we can see that with kernel-space OVS the bandwidth (measured with iperf) is around 190 Mbps, while with user-space OVS it is around 23 Mbps.

Note: Mininet Internet Connection

By default, the mininet vm comes without connection to the host network. This happens because the guest network is implemented with the virtio paravirtualization driver.

Up Next…

A follow-up post will contain notes from the rest of the tutorial, using the POX controller to implement L2 and L3 learning.

[1]: http://www.openflow.org/documents/openflow-spec-v1.0.0.pdf

Initial CONFINE design

The following notes are adapted from the deliverable “Initial system software and services of the testbed” of the CONFINE project.

Node Architecture:

Early Dilemma:

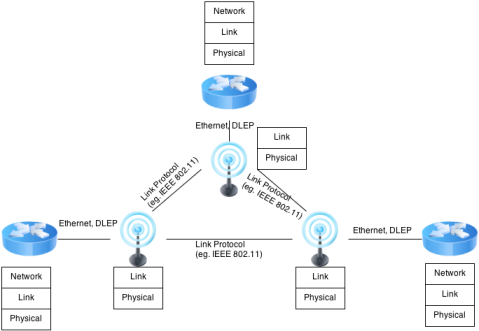

1. Deploy hardware that is known to be stable over a long time (mostly with regard to the outdoor conditions it faces), at the cost of CPU performance

2. Deploy hardware with reasonable CPU performance and virtualize Wi-Fi interfaces using the DLEP protocol developed in the CONFINE project.

In the end, the second option was chosen (focus on researchers’ needs).

Confine Node Software

- LXC for isolation and virtualization of sliver systems

- Linux Cgroups for ensuring local sliver isolation

- Linux TC and Qdisc tools for controlling sliver network load

- VLAN tagging and Linux firewall tools to ensure network isolation

  - Public interface: L4 and up -> ebtables

  - Isolated interface: L3 and up -> VLAN

  - Raw interfaces: Layer 1 and up -> physically separated, not yet supported

  - Passive interface: capture realtime traffic -> anonymity problem? not yet supported

- Tinc for providing the management overlay network

Development Cycles

First Iteration: “A-Hack”

Implements the node's internal data structures and functions for executing node and experiment management tasks.

Second Iteration: “Bare-Bones”

Reuses the outcome of the first development cycle as backend functions. Once completed, it will contain the full node design and management API.

Node & Sliver Management Functions

- node_enable/disable: cleanly activate/deactivate participation of the RD in the testbed. Reads node and testbed specific config from /etc/config/CONFINE and /etc/config/CONFINE-defaults to set up: hostname, ssh public keys, tinc mgmt net, br-local, br-internal, dummy testing LXC container

- sliver_allocate: allocate resources on an RD necessary to execute an experiment

- sliver_deploy: set-up sliver environment in an RD (What’s the difference between allocate and deploy???)

- sliver_start/stop/remove: create VETH iface, install ebtables, tc, qdisc, boot LXC container, set-up cgroups, set-up management routes

Open Question – OpenFlow

Layer 2 experiments are considered by supporting the

OpenFlow standard and other Software-Defined Networking

(SDN) possibilities enabled via the Open vSwitch [OvS]

implementation by allowing researchers the registration

of a switch controller. Therefore Open vSwitch has been

ported to OpenWrt but further investigations and

development efforts are needed to clarify the management

and integration of this architecture into the CNS.

Note about VCT

The virtual RDs are linked with their local interface to a local bridge (vct-local) on the system hosting the VCT environment. Further bridges are instantiated to emulate direct links between nodes (vct-direct-01, vct-direct-02, …).

By connecting the vct-local bridge to a real interface it is possible to test interaction between virtual and real CONFINE nodes (RDs). This way VCT can also assist in managing real and physically deployed nodes and experiments.

OMF Integration

In the CONFINE project, a custom testbed management

framework has been developed. However, to allow

interoperability with existing tools and to avoid

a steep learning curve for researchers, the project

has also developed an OMF integration.

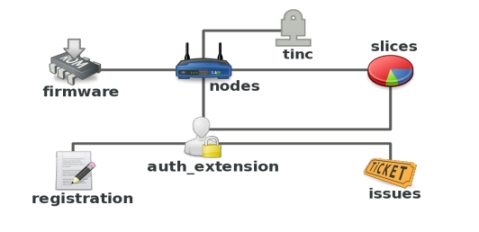

nodeDB or CNDB or commonNodeDB

For community wireless networks (CWNs), a node database

serves as a central repository of network information.

It comprises information about nodes deployed at certain

locations, devices installed at these locations,

information about internet addresses, and — in

networks that use explicit link planning — links among

devices.

[...]

Historically, each community network has built their

own node database and corresponding tools. There was

some effort to come up with a common implementation of a

node database but so far none of these efforts has seen

deployment beyond one or two installations.

[...]

To get a better understanding of the common

requirements of community wireless networks and to

eventually facilitate interoperability, the community

network markup language (CNML) project was founded in 2006.

To date we know of an implementation by guifi.net and

another similar XML export by Athens Wireless

Metropolitan Network (AWMN).

Common Node Database:

To avoid duplicating data in the community network database

and the research database, the common node database

implementation was started.

Some possible applications that nodeDB will enable are: IP address registry and allocation, Map, Link planning, Auto configuration of devices. Also, a common DB approach will enable the investigation of experimental features such as: Social networking functions, Federation, Services offered by the network or by users.

Testbed Management Implementation

The management software is implemented based on Django.

Federation

Horizontal or network federation:

Interconnection of different community networks at the

network layer (OSI layer 3) so that a testbed can span

several disconnected networks, i.e. so that testbed

components in different networks can be managed by the

same entity and they can reach each other at the network

layer.

Vertical or testbed federation

Given a set of different, autonomous testbeds (each one

being managed by its own entity), the usual case is that

the resources and infrastructure in any of them is not

directly available to experiments running in other

testbeds because of administrative reasons (e.g.

different authorized users) or technical ones (e.g.

incompatible management protocols).

Useful Ideas

- Community-Lab is a testbed deployed based on the CONFINE testbed software system.

- Dynamic Link Exchange Protocol (DLEP) is a proposed IETF standard protocol for radio-router communication. The intention is to be able to transport information, such as layer 1/2 information, from a radio to a router, e.g. over a standard ethernet link. Depending on the final feature set, the router might even be able to (re-)configure certain settings of the radio. The general use of these protocols is to provide link layer information to the L3 protocols running on the router.

- Process control group (Cgroup) is a Linux kernel feature to limit, account and isolate resource usage (CPU, memory, disk I/O, etc.) of process groups. One of the design goals of cgroups was to provide a unified interface to many different use cases, from controlling single processes (like nice) to whole operating system-level virtualization (like OpenVZ, Linux-VServer, LXC).

- cOntrol and Management Framework (OMF) is a control and management framework for networking testbeds.

- Community Network Markup Language (CNML) is a project of people working on an open and scalable standard for local mesh networks, also referred to as local clouds. It is the aim of the project to define an ontology which acts as the basis for the set up and operation of mesh clouds.

- nodeDB or CNDB is a database where all CONFINE devices and nodes are registered and assigned to responsible persons. The nodeDB contains all the backing data on devices, nodes, locations (GPS positions), antenna heights and alignments: essentially anything that is needed to plan, maintain and monitor the infrastructure.

Virtual Confine Testbed (VCT)

What is VCT

Virtual Confine Testbed (VCT) is an emulator of an actual CONFINE testbed environment. VCT is an easily deployable resource that serves several goals at once. For the end-user researcher, VCT is a platform for getting a quick overview of how a CONFINE testbed works, becoming familiar with the environment, and testing experiments destined for the CONFINE testbed. For the future CONFINE developer, VCT makes it easier to become familiar with CONFINE research devices (RDs) and their behaviour, as an actual CONFINE OpenWrt image is used for the virtual nodes.

VCT Implementation

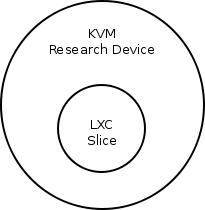

VCT uses two levels of virtualization as it creates virtual nodes (RDs) that contain slices belonging to experiment slivers. The actual RDs use Linux Containers (LXC) to run the slices. Thus, we need a virtualization layer that will surround the slice containers and will run the virtual node. This is achieved using KVM. Thus the two layers of virtualization are: KVM nodes and inside the slice containers, as seen in the image below.

All the scripts are softlinks to the main script vct.sh. The scripts are actually implemented as functions in vct.sh where the main function redirects to the corresponding function after checking the name of the executable.
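The dispatch-on-name pattern described above can be sketched as follows. This is not the actual vct.sh code: the command names `example_start` and `example_stop` and the function `dispatch` are placeholders chosen for illustration.

```shell
# Sketch of a single script that serves several commands through symlinks.
# The command names below are placeholders, not the actual vct.sh functions.
example_start() { echo "starting $*"; }
example_stop()  { echo "stopping $*"; }

# Pick the function named after the executable (the symlink that was invoked).
dispatch() {
    cmd=$(basename "$1")
    shift
    case "$cmd" in
        example_start|example_stop) "$cmd" "$@" ;;
        *) echo "unknown command: $cmd" >&2; return 1 ;;
    esac
}

# In a real script the last line would be: dispatch "$0" "$@"
```

With that last line enabled and symlinks named example_start and example_stop pointing at the script, running `./example_start node1` would print "starting node1". Listing the allowed names in the `case` statement keeps an unexpected symlink name from running an arbitrary function.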